Kinect

After Microsoft released the Kinect sensor a few years ago, many of the things that were incredibly difficult to do with normal camera tracking became much easier.

What can you do with it?

Use the Depth Image

Create point clouds and volumetric graphics:

- Use point cloud data in your programming environment (like Processing)

- Z-Vector – A VJing tool that uses the Kinect camera

- RGBD Toolkit – Combine footage from an HD DSLR camera with the depth image

- Skanect – 3D-scan objects (or yourself)
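To give an idea of how a depth image becomes a point cloud, here is a minimal sketch in Python using the standard pinhole camera model. It assumes the depth image is a grid of distances in millimetres where 0 means "no reading". The focal length and principal point below are illustrative placeholders, not calibrated values for any particular sensor, and the function name `depth_to_points` is made up for this example.

```python
# Illustrative intrinsics for a 640x480 depth image. These are assumptions:
# real values come from calibrating your own sensor.
FX = 575.0   # focal length in pixels, horizontal (assumed)
FY = 575.0   # focal length in pixels, vertical (assumed)
CX = 319.5   # principal point x (assumed to be the image centre)
CY = 239.5   # principal point y (assumed to be the image centre)


def depth_to_points(depth, fx=FX, fy=FY, cx=CX, cy=CY):
    """Back-project a 2D grid of depth values into a list of (x, y, z) points.

    depth is a list of rows; each value is a distance in millimetres,
    and 0 (or less) is treated as a missing reading and skipped.
    """
    points = []
    for v, row in enumerate(depth):        # v = pixel row
        for u, z in enumerate(row):        # u = pixel column
            if z <= 0:
                continue
            # Pinhole model: a pixel's offset from the principal point,
            # scaled by depth and divided by the focal length.
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, float(z)))
    return points
```

Each resulting point can then be drawn as a dot in 3D space, which is essentially what the point cloud examples in the tools above do.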

Depth Tracking

The depth image can be used for tracking just as we did with other cameras in the last lecture. We can do thresholding, blob tracking, etc.

- TuioKinect – Define a certain area of depth that you want to track
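Depth thresholding can be sketched in a few lines of Python. The example below keeps only the pixels whose depth falls between a near and a far limit and then averages their positions. This is a simplification: it finds the centre of everything inside the depth range rather than separating individual blobs, and the function names are made up for this example.

```python
def threshold_depth(depth, near, far):
    """Return a mask of booleans: True where near <= depth <= far."""
    return [[near <= z <= far for z in row] for row in depth]


def centroid(mask):
    """Return the average (x, y) position of the True pixels, or None."""
    sum_x = sum_y = count = 0
    for y, row in enumerate(mask):
        for x, inside in enumerate(row):
            if inside:
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        return None   # nothing inside the depth range
    return (sum_x / count, sum_y / count)


# A tiny 3x2 "depth image" in millimetres; 0 means no reading.
depth = [
    [0,    900,  950],
    [3000, 1000, 3000],
]
mask = threshold_depth(depth, near=800, far=1200)
position = centroid(mask)
```

With a real sensor you would run this on every frame, so that `position` follows whatever enters the chosen slice of depth, for example a hand reaching towards the camera.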

Tracking Humans

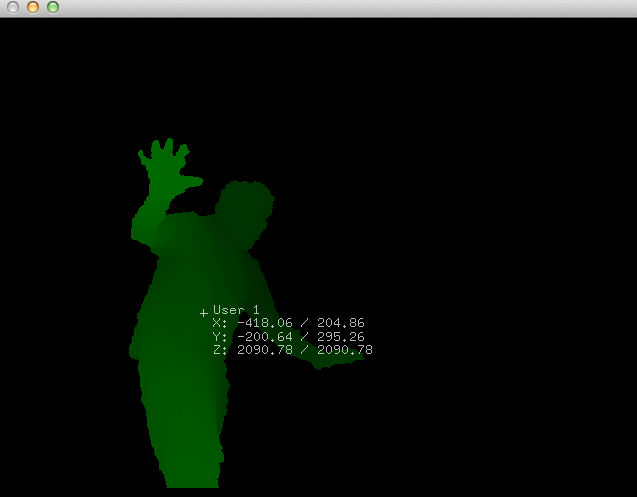

When the Kinect (or another depth camera) is combined with some clever software, you can track human beings in a space fairly easily, or at least much more easily than was possible just a couple of years ago.

You can either track the user (the overall position of a person)…

…or you can track the skeleton (different joints of the body: head, neck, hands, knees, feet etc.)

There are two main SDKs you can use for the tracking in your code:

- OpenNI – An open-source SDK for depth cameras made by the company that developed the original Kinect hardware

- Kinect for Windows – SDK developed by Microsoft. Only works on Windows

How about Processing?

There is a tutorial available about the video above.

1) You can use the SimpleOpenNI library.

- Installation instructions

- Open some of the examples that come with the library (File → Examples)

- Or download these small examples that I made. They are a little bit simpler.

2) You can use some other software that does the tracking and sends the data to Processing or other OSC-compatible software.

- OpenNI2OSC – A small program made by me (Matti). Sends the user data and the joint data on port 12345. Works with up to 5 people. Doesn’t need a calibration pose.

- KinectA – Skeleton tracking, hand tracking, object tracking. Has an actual interface

- Synapse – Another OSC sender. Works with only one person. Needs a calibration pose.

- OSCeleton – A pain to set up, but works very well.

- NI Mate – Made by a Finnish company. Quite easy to use, integrated Processing support, OSC, MIDI… But the full version is not free.
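To show what receiving this kind of data involves, here is a minimal sketch of an OSC receiver in Python using only the standard library. It decodes plain OSC messages with int, float and string arguments and ignores bundles. The port 12345 matches the one mentioned for OpenNI2OSC above, but the address and argument layout each tool actually sends are not covered here, so check the documentation of the sender you use. In practice you would normally use an existing OSC library instead of parsing by hand.

```python
import socket
import struct


def _read_padded_string(data, offset):
    """Read a null-terminated OSC string, padded to a multiple of 4 bytes."""
    end = data.index(b"\x00", offset)
    text = data[offset:end].decode("ascii")
    next_offset = (end + 4) & ~3   # skip the terminator and the padding
    return text, next_offset


def parse_osc_message(data):
    """Decode one OSC message into (address, list_of_arguments)."""
    address, offset = _read_padded_string(data, 0)
    type_tags, offset = _read_padded_string(data, offset)
    if not type_tags.startswith(","):
        raise ValueError("missing OSC type tag string")
    args = []
    for tag in type_tags[1:]:
        if tag == "i":     # 32-bit big-endian integer
            args.append(struct.unpack_from(">i", data, offset)[0])
            offset += 4
        elif tag == "f":   # 32-bit big-endian float
            args.append(struct.unpack_from(">f", data, offset)[0])
            offset += 4
        elif tag == "s":   # padded string
            text, offset = _read_padded_string(data, offset)
            args.append(text)
        else:
            raise ValueError("unsupported OSC type tag: " + tag)
    return address, args


def listen(port=12345):
    """Print every OSC message that arrives on the given UDP port."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.bind(("0.0.0.0", port))
    while True:
        data, _sender = sock.recvfrom(4096)
        if data.startswith(b"#bundle"):
            continue   # bundles are not handled in this sketch
        print(parse_osc_message(data))
```

Calling `listen()` while one of the senders above is running should print the incoming messages, which is a quick way to find out what addresses and values a given tool sends before you write the real sketch.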

Leap Motion

The Kinect is good for bigger motions in a space, but it’s not accurate enough to detect subtle motions of your fingers. Leap Motion might be more useful for finer details.

Once again, a Processing library exists for the Leap Motion. Actually, there are at least three of them. They all do pretty much the same thing, so pick one and look at its examples to see how to use it.